IssueWarningQuota / Exchange Online Mailbox Quota Alerts?

Good morning.

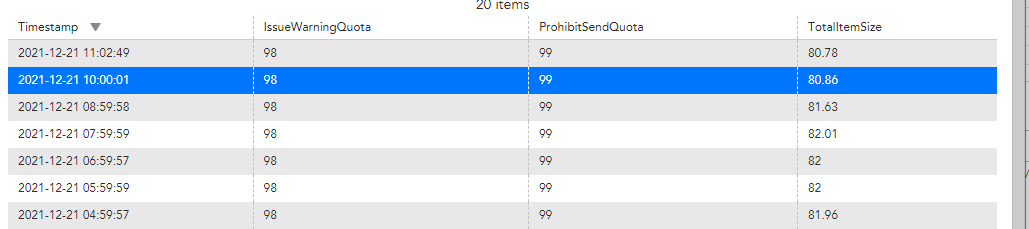

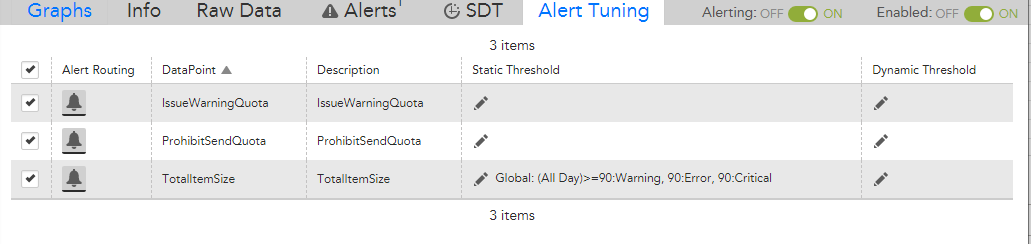

We are currently using the O365 SaaS connector. It's a nice connector, but it lacks detailed monitoring such as individual mailbox activity and stats. There is one metric I would like to manage via LM: the "IssueWarningQuota" mailbox status. I want to know when a mailbox has consumed more space than its "IssueWarningQuota". That would let our technology team know when a user's mailbox is getting to the point where we need to ask them to archive or delete items. It's also a critical metric for service account mailboxes, which could cause production issues if they hit the "ProhibitSendReceiveQuota".

This is not available in the SaaS product. I am wondering whether anyone else has successfully done this in LogicMonitor. I know we can run PowerShell with custom datapoints, but I am not yet sure how to correctly grab mailbox sizes and check them against IssueWarningQuota. Any ideas?
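
To make the question concrete, here is a rough sketch of the comparison I have in mind. It is untested and rests on several assumptions: the ExchangeOnlineManagement module is installed, `Connect-ExchangeOnline` has already been run, and both `TotalItemSize` and `IssueWarningQuota` come back as strings shaped like `49 GB (52,613,349,376 bytes)` (or `Unlimited` for the quota). The function names `ConvertTo-ByteCount` and `Get-MailboxQuotaStatus` are my own placeholders, not part of any module.

```powershell
# Sketch only: assumes the ExchangeOnlineManagement module is installed and
# Connect-ExchangeOnline has already been run in this session.

# Hypothetical helper: pull the byte count out of a size string such as
# "49 GB (52,613,349,376 bytes)". Returns $null for "Unlimited" or any
# string that does not contain a "(N bytes)" part.
function ConvertTo-ByteCount {
    param([string]$Size)
    if ($Size -match '\(([\d,]+)\s+bytes\)') {
        return [int64]($Matches[1] -replace ',', '')
    }
    return $null
}

# Hypothetical helper: emit one object per mailbox with its size, its
# warning quota, and a 0/1 flag that is 1 when the size exceeds the quota.
function Get-MailboxQuotaStatus {
    Get-EXOMailbox -ResultSize Unlimited -PropertySets Quota | ForEach-Object {
        $stats = Get-EXOMailboxStatistics -Identity $_.UserPrincipalName
        $used  = ConvertTo-ByteCount ([string]$stats.TotalItemSize)
        $warn  = ConvertTo-ByteCount ([string]$_.IssueWarningQuota)
        [pscustomobject]@{
            Mailbox     = $_.UserPrincipalName
            UsedBytes   = $used
            WarnBytes   = $warn
            # An unlimited (null) quota can never be exceeded, so the flag stays 0.
            OverWarning = [int]($null -ne $warn -and $used -gt $warn)
        }
    }
}
```

Calling `Get-MailboxQuotaStatus` would then give one row per mailbox, and the `OverWarning` value could presumably be written out as a custom datapoint. The same pattern should work for "ProhibitSendReceiveQuota" by swapping the property name. What I have not worked out is how this performs across a large tenant, since it makes one statistics call per mailbox.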