6 years ago

How to stagger datasource instance collection?

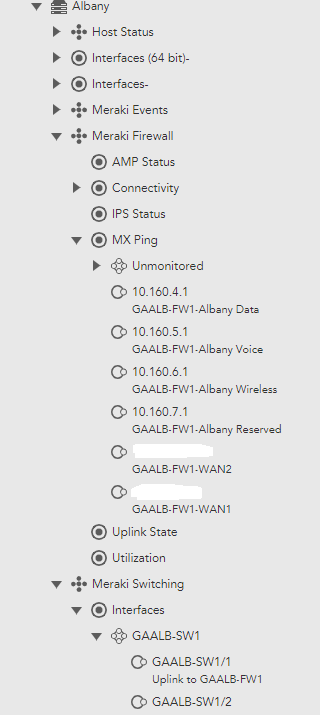

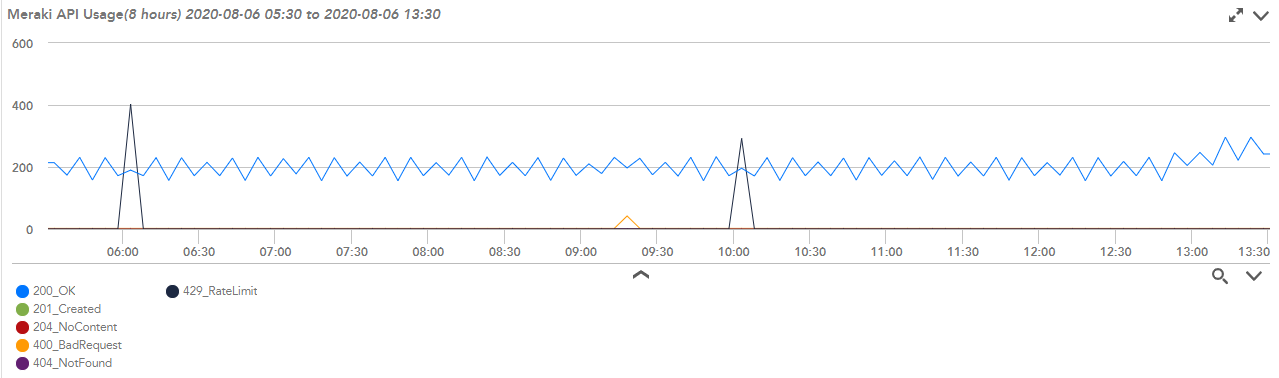

I'm working with custom Meraki API datasources and have an issue where the collector can get into a state where all the instances attempt to collect simultaneously, or at least closely enough together to trigger Meraki's rate limiting, and even my backoff/retry isn't doing the job.

I would think that if the collector script for my custom datasource is set to sleep for a rand() number of seconds, I should be able to avoid this. Any thoughts about what I might be doing wrong, or is there a "LogicMonitor way" I should be handling this?
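For illustration, a random start delay of that kind could be sketched as below. This is a minimal sketch, not the actual collector script: the `jitterDelayMs` helper and the 30-second upper bound are assumptions made for the example, and the bound would need to stay well under the datasource's collection timeout.

```groovy
// Hypothetical helper (not from the original script):
// returns a random start delay in the range [0, maxJitterMs).
long jitterDelayMs(long maxJitterMs, Random rng = new Random()) {
    return (long) (rng.nextDouble() * maxJitterMs)
}

// Assumed upper bound for the example; tune it to the collection interval and timeout.
long maxJitterMs = 30_000
long startDelay = jitterDelayMs(maxJitterMs)
println "Would sleep ${startDelay} ms before the first API call"
// sleep(startDelay)   // enable in the real script, before the first request
```

Random delays only make a simultaneous start less likely; with many instances, some will still land close together, so the retry path still has to cope with 429 responses.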

The below is what I'm doing to try to account for 429 Rate Limit responses. (Not saying this is 'correct' in any way, but I thought the retry logic should've worked.)

    def getAPIQueryOutput(String api_uri) {
        url = 'https://api.meraki.com' + api_uri
        req = getHTTPResponse(url)

        // Got it the first try
        if(req.responseCode == 200){
            return new JsonSlurper().parseText(req.inputStream.getText('UTF-8'))
        } else if(req.responseCode == 204) {
            // Entry exists but did not have data.
            return null
        } else if(req.responseCode == 400) {
            // Bad request.
            return null
        } else if(req.responseCode == 404) {
            // Whatever we tried to find didn't exist. Return null.
            return null
        }

        // 429 received due to rate limiting, backoff and try again.
        count = 1
        while(req.responseCode == 429 && count < 4){
            backoff = getBackOffMs(count)
            // Wait for increasing amount of time.
            sleep(backoff)
            req = getHTTPResponse(url)
            count++
        }

        // Return whatever we ended up with.
        return new JsonSlurper().parseText(req.inputStream.getText('UTF-8'))
    }

    def getBackOffMs(count){
        // Get a random int between 1 - 3 inclusive.
        backoff_seed = new Random().nextInt(3) + 1
        return backoff_seed * 1000 * count
    }
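With the retry logic above, the three retries wait at most 3 + 6 + 9 = 18 seconds in total, and every instance draws from the same small set of delays, so instances that collide once can easily collide again. One commonly used alternative is exponential backoff with full jitter that also honors the `Retry-After` header, which Meraki's documentation describes for 429 responses. The following is a hedged sketch of the delay calculation only; `nextDelayMs`, `baseMs`, and `capMs` are hypothetical names and values, not part of the original script or of any LogicMonitor API.

```groovy
// Hypothetical helper: picks the wait before the next retry after a 429.
// attempt is 1-based; retryAfterHeader is the raw "Retry-After" value, or null.
long nextDelayMs(int attempt, String retryAfterHeader, Random rng = new Random(),
                 long baseMs = 1000, long capMs = 30000) {
    // Exponential ceiling: baseMs * 2^(attempt - 1), capped at capMs.
    long ceiling = Math.min(capMs, baseMs * (1L << (attempt - 1)))
    // Full jitter: a uniform random value in [0, ceiling).
    long jittered = (long) (rng.nextDouble() * ceiling)
    // If the server supplied Retry-After in whole seconds, wait at least that long.
    if (retryAfterHeader?.isInteger()) {
        return retryAfterHeader.toInteger() * 1000L + jittered
    }
    return jittered
}

// Example: delays for four attempts when the server asks for a 1-second pause.
(1..4).each { attempt ->
    println "attempt ${attempt}: wait ${nextDelayMs(attempt, '1')} ms"
}
```

Inside the `while` loop this could replace `getBackOffMs(count)`, for example `sleep(nextDelayMs(count, req.getHeaderField('Retry-After')))`, assuming `getHTTPResponse` returns an `HttpURLConnection`. The final `return` would also need to check `req.responseCode` before reading `req.inputStream`, since the last attempt may still have been rate limited.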