Has anybody noticed the flaw in LogSource logic?

So LogSources have a few purposes:

- They allow you to filter out certain logs. I’m not sure of the use case here, since the LogSource is explicitly including logs. Maybe the point is to allow you to exclude certain logs that have sensitive data. No masking of data, just ignoring the whole log. It’s not clear whether the ignored logs are still in LMLogs or get dumped entirely.

- They allow you to add tags to logs. This is actually pretty cool. You can parse out important info from the log or add device properties to the logs. This means you can add device properties to the log that can be displayed as a separate column, or even filtered upon. Each of our devices has a customer property on it. This means I can add that property to each log and search or filter by customer. Device type, ssh.user, SKU, serial number, IP address, the list is literally endless.

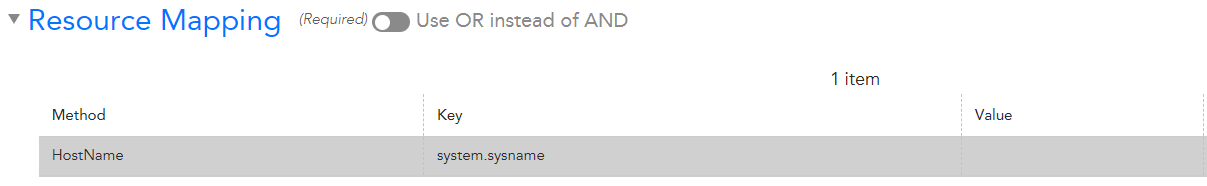

- They allow you to customize which device the logs get mapped to. You can specify that the incoming log should be matched to device via IP address, or hostname, or FQDN, or something else. The documentation on this isn’t exactly clear, but that actually doesn’t matter because…

The LogSources apply to logs from devices that match the LogSource’s AppliesTo. Which means the logs need to already be mapped to the device. Then the LogSource can map the logs to a certain device. Do you see the flaw? How is a log going to be processed by a LogSource so it can be properly mapped to a device, if the LogSource won’t process the log unless it matches the AppliesTo, which references the device to which the logs haven’t yet been mapped?
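To make the circular dependency concrete, here is a minimal Python sketch. It is only an illustration of the logic as described above, not LogicMonitor's actual implementation: the `Device` and `LogSource` classes, the `map_by` field, and the `select_log_sources` function are all hypothetical names made up for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Device:
    # Hypothetical stand-in for a monitored resource
    name: str
    ip: str
    properties: Dict[str, str] = field(default_factory=dict)


@dataclass
class LogSource:
    # Hypothetical stand-in for a LogSource
    name: str
    applies_to: Callable[[Device], bool]  # AppliesTo is evaluated against a device
    map_by: str                           # how this LogSource wants logs mapped, e.g. "ip"


def select_log_sources(device: Optional[Device],
                       log_sources: List[LogSource]) -> List[LogSource]:
    """Return the LogSources whose AppliesTo matches the log's device."""
    if device is None:
        # The log has not been mapped to a device yet, so there is nothing
        # to evaluate AppliesTo against and no LogSource can be selected.
        return []
    return [ls for ls in log_sources if ls.applies_to(device)]


firewall = Device("fw01", "10.0.0.1", {"customer": "acme"})
sources = [
    LogSource("Firewall logs",
              lambda d: d.properties.get("customer") == "acme",
              map_by="ip"),
]

# A log that is not yet mapped to a device never reaches the LogSource,
# so the LogSource's own mapping rule (map_by) never gets a chance to run.
unmapped = select_log_sources(None, sources)

# The LogSource is only selected once the device is already known,
# at which point its mapping rule is no longer needed.
mapped = select_log_sources(firewall, sources)

print([ls.name for ls in unmapped])  # []
print([ls.name for ls in mapped])    # ['Firewall logs']
```

In this sketch, `map_by` is only reachable after the device has been identified by some other means, which is exactly the step `map_by` was supposed to perform.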

LogSources should not apply to devices. They should apply to Log Pipelines.

Hi @Stuart Weenig, thank you for taking the time to share your feedback with us. We genuinely appreciate your insights into our system!

In your post, you’ve rightly identified a challenge we face when it comes to mapping logs to log sources. It’s indeed a bit of a chicken-and-egg situation. Allow me to clarify the reasoning behind our approach:

When a log is sent from a device to LogicMonitor and enters our processing pipeline, the question arises: how do we identify the source device? Is it by the message content, the header information, or the log format?

If we were to rely solely on the message content for identification, it would be a time-consuming and cumbersome process. This would mean having to account for log formats from a wide array of vendors, which can be quite complex.

Our solution is to identify logs by the device itself. Typically, devices of the same type tend to generate similar logs. As a result, grouping logs based on the device within the “appliesTo” section simplifies the process.

Trying to use “appliesTo” logic to match specific log formats, on the other hand, would be unwieldy and require complex regular expressions. Therefore, the first step is to properly identify the log’s source device before we can effectively take any actions.

Our goal is to properly identify a log to avoid things like improperly tagging or filtering. We find that identifying logs by the resource (device) makes the most sense in this context. Attempting to do this by pipeline would place us back in a similar situation, as pipelines are essentially groups of devices.

I hope this explanation clarifies our approach, and if you have any further questions or suggestions, please feel free to share them. Your input is invaluable as we continually strive to improve our system.

Thank you for being a part of our community.