Sample Rate Increase or high-water-mark report

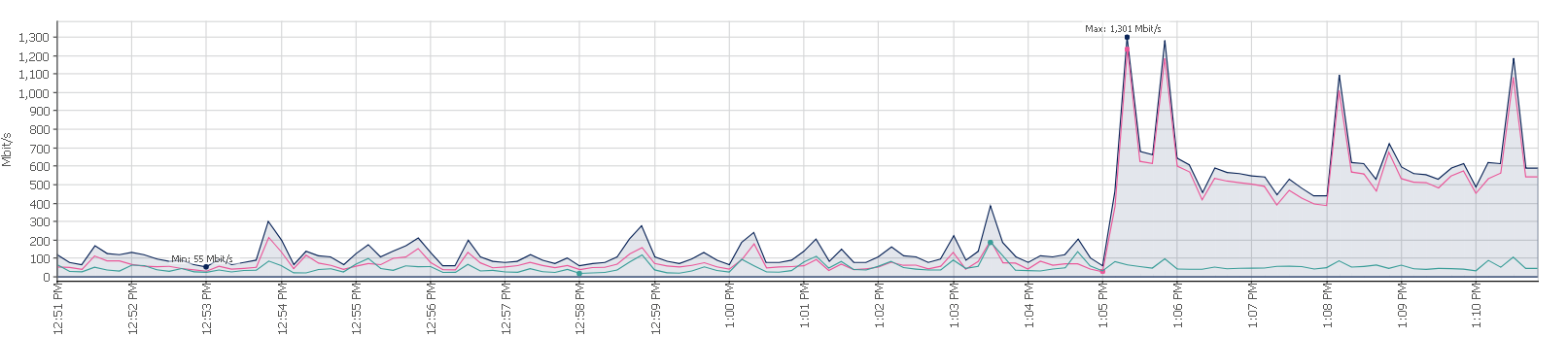

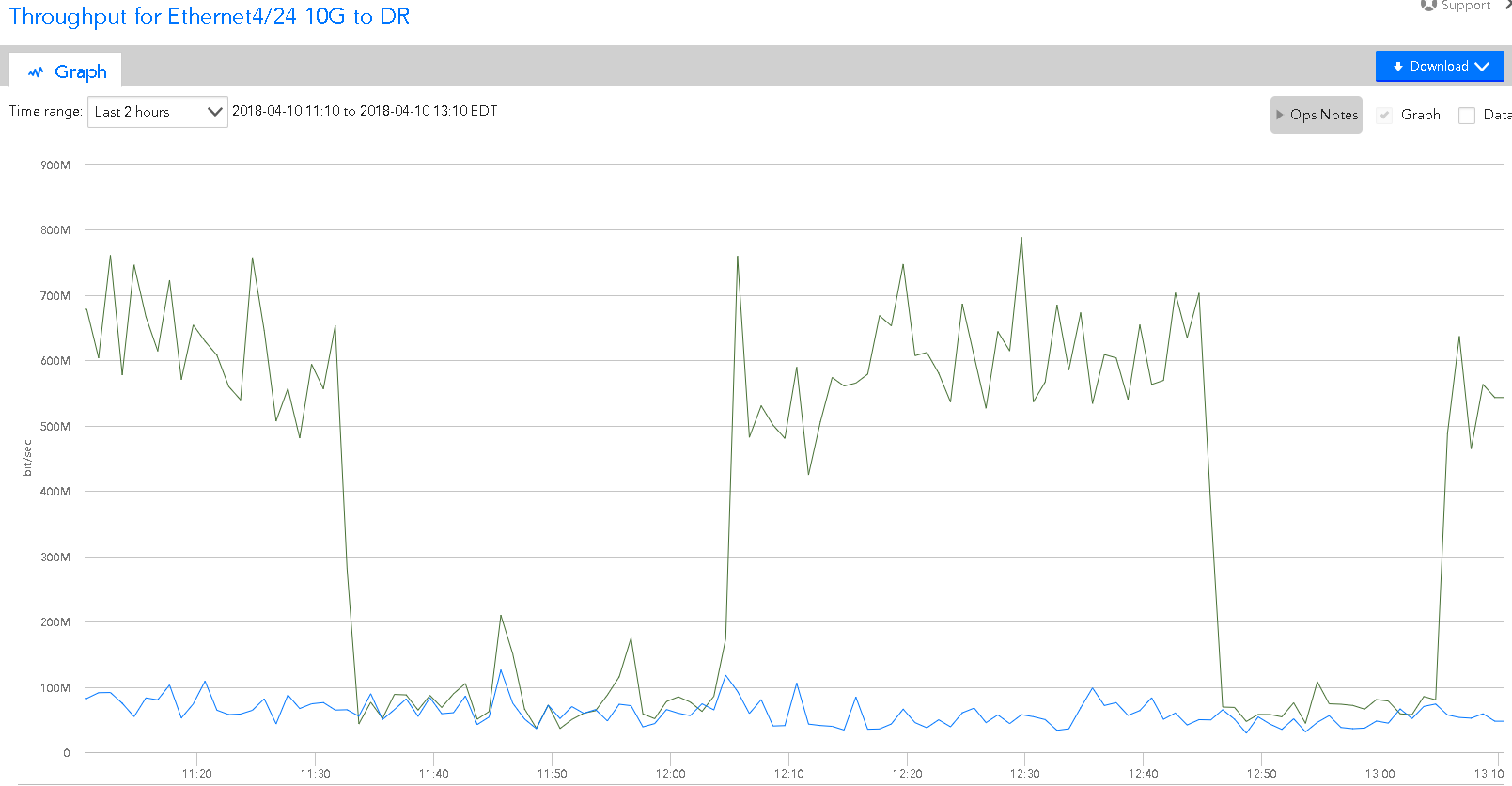

The default sample rate of 1 minute leads to misleading results, particularly on high-bandwidth interfaces. There has to be a way to infer what happens on a 10G interface in between polls. Instead of reading only the bit rate at poll time, is there any way to compare the total bytes/packets sent between polls and use the difference to plot the estimated bit rates that would be required to account for the discrepancy? Please see the two attached graphs: one is LogicMonitor at a 1-minute polling rate and the other is a competitor at 10 seconds for the same interface. Although the LogicMonitor sample covers 2 hours and the 'other' covers 20 minutes, you get the idea. They tell two completely different stories. There has to be a better way to read and interpret the results from bursty traffic. We are preparing 100G interfaces shortly, and there is no way we can reliably monitor these with LogicMonitor unless there is some way to account for the overall data transmitted and not just whatever the rate happens to be at each polling interval.
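To make the request concrete, here is a minimal Python sketch of the calculation I have in mind. It assumes the device exposes a cumulative 64-bit octet counter (something like `ifHCInOctets` from the IF-MIB) and that the poller keeps the previous reading. The function name and parameters are made up for illustration; this is not LogicMonitor's API, and I don't know how the product computes its rates internally.

```python
# Hypothetical helper: average bit rate between two polls, derived from the
# difference of a cumulative octet counter rather than a point-in-time rate.

COUNTER64_MODULUS = 2 ** 64  # assumption: a 64-bit counter that wraps to zero


def average_bps(prev_octets, curr_octets, interval_seconds,
                modulus=COUNTER64_MODULUS):
    """Return the average bits per second over one polling interval."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    # Modular subtraction handles a single counter wrap between polls.
    delta_octets = (curr_octets - prev_octets) % modulus
    return delta_octets * 8 / interval_seconds


# Illustrative numbers only: a 10G link that runs at line rate for 6 seconds
# and is idle for the rest of the minute moves 7.5 GB in that minute.
burst_octets = 7_500_000_000
one_minute_view = average_bps(0, burst_octets, 60)        # whole minute
ten_second_view = average_bps(0, burst_octets, 6)         # the burst itself
print(one_minute_view / 1e9, "Gbps averaged over 60 s")
print(ten_second_view / 1e9, "Gbps during the 6 s burst")
```

The counter difference accounts for every byte sent in the interval, so the result is a true average and also a lower bound on the peak rate inside that interval. It still cannot show how high a burst went within the window, which is why a shorter polling interval or a high-water-mark report would be needed on top of this.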