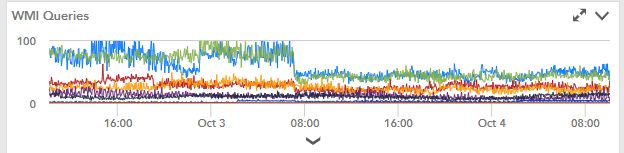

I've also lowered the timeout on the WMI calls so they fail faster. They're bottlenecking pretty hard, entirely because of the available ports and the TIME_WAIT delay required by the TCP/IP spec. I've even gone so far as to triple the number of available ephemeral ports, since the collectors we've got are used for nothing but LM. The problem is that LM doesn't seem to care whether any ports are available; it queues the requests no matter the state of the collector itself. Once they start timing out, they become a logjam that builds on itself and brings metric collection to a screeching halt. Since we use our data collection to prove our SLA to our customers, I have to alleviate that while balancing it against the spend on extra VMs to increase capacity.
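To put rough numbers on the port ceiling: by Little's law, the ephemeral ports in use equal the rate of new connections times how long each port is held (connection lifetime plus TIME_WAIT). The sketch below inverts that to get a sustainable call rate. It assumes every outbound connection takes its own ephemeral port. `connections_per_call` is a hypothetical knob, because a WMI call over DCOM can open more than one TCP connection (the endpoint mapper on port 135, then a dynamic RPC port). The port counts and TIME_WAIT values in the usage lines are illustrative, not measured from our collectors.

```python
def max_wmi_calls_per_second(
    ephemeral_ports: int,
    time_wait_s: float,
    avg_connection_s: float = 0.0,
    connections_per_call: int = 1,
) -> float:
    """Rough upper bound on sustained WMI calls/s before ephemeral ports run out.

    Assumption: each outbound TCP connection holds one ephemeral port for its
    own lifetime plus the TIME_WAIT period after it closes.
    """
    hold_time_s = avg_connection_s + time_wait_s
    if hold_time_s <= 0:
        raise ValueError("port hold time must be positive")
    if connections_per_call < 1:
        raise ValueError("connections_per_call must be at least 1")
    # Little's law: ports_in_use = connection_rate * hold_time
    max_connections_per_s = ephemeral_ports / hold_time_s
    return max_connections_per_s / connections_per_call


# Illustrative values only: a 16384-port range vs. a tripled range, 240 s TIME_WAIT.
baseline = max_wmi_calls_per_second(16384, 240)
tripled = max_wmi_calls_per_second(3 * 16384, 240)
print(f"baseline ~{baseline:.1f} calls/s, tripled ~{tripled:.1f} calls/s")
```

The takeaway is that the ceiling scales linearly with the port range but inversely with hold time. That is why shortening the call timeout (lowering `avg_connection_s` for calls that hang) helps alongside adding ports.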

We have no collector groups with more than 250 devices, which is well under the capacity we were told they should be able to support. Since that doesn't seem to hold, we're having to re-provision our VM spend for the system and revisit our messaging to customers. We'd initially told them this change would reduce the need for the extra VMs in their environments that we'd needed with SCOM (we can get into trust and security model discussions about open access vs. a gatewayed single point of trust later). But those 200+ servers are choking our two (really well-balanced) collectors. So we're going to be forced to re-examine our deployment if I can't tune LM to handle the WMI load (heavily focused on Hyper-V and clustered resources).

I've reduced the frequency on nearly every WMI-based 'Source we have, as well as the script-based ones that use WMI to gather their data. I've easily cut the load in half since I started, and it's still hitting the ceiling on TCP ports. I've got it flowing pretty well and not dropping much at this point, but I'll have to add more customers soon, and that will add to the load on the collectors. (Not having to add local resources is a sales point for us.)
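One way to see where the remaining load comes from is to tally calls per second across 'Sources: each one contributes instances × calls per poll ÷ poll interval, so doubling an interval halves that source's share. The sketch below is a back-of-the-envelope model, not a LogicMonitor API. `PolledSource`, its fields, and the example source names and numbers are hypothetical placeholders you would fill in from your own DataSource settings.

```python
from dataclasses import dataclass


@dataclass
class PolledSource:
    """Hypothetical model of one WMI-backed 'Source (not a LogicMonitor object)."""
    name: str
    instances: int           # devices/instances this source polls
    interval_s: float        # collection interval in seconds
    calls_per_poll: int = 1  # WMI calls issued per instance per poll (assumed)


def calls_per_second(sources: list[PolledSource]) -> float:
    """Total WMI calls/s the collector must sustain across all sources."""
    return sum(s.instances * s.calls_per_poll / s.interval_s for s in sources)


# Illustrative inventory for ~200 servers; names and numbers are placeholders.
before = [
    PolledSource("HyperV_VMs", 200, 60, calls_per_poll=2),
    PolledSource("Cluster_Resources", 200, 120),
]
after = [
    PolledSource("HyperV_VMs", 200, 120, calls_per_poll=2),
    PolledSource("Cluster_Resources", 200, 240),
]
print(f"before: {calls_per_second(before):.2f} calls/s, after: {calls_per_second(after):.2f} calls/s")
```

Comparing the resulting rate against a port-based ceiling shows how much headroom is left before adding the next customer's devices.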

Ultimately, I'm just looking for other places I can trim the fat on this system to keep it within initial spec.
